Preface

This document is a strategic nonproliferation analysis modeled after the IAEA’s State Evaluation Report (SER) format. Developed as part of an academic project, it assesses a specific country’s nuclear capabilities, incentives for proliferation, and potential safeguards challenges. The goal is to simulate real-world intelligence analysis and offer policy-relevant insights on nuclear risk and verification needs.

Introduction

Turkey occupies a unique strategic position at the crossroads of Europe and the Middle East, neighboring several current or former weapons of mass destruction (WMD)-proliferating states. As a longstanding NATO member under the US nuclear umbrella, Turkey has historically relied on alliance commitments for its security, including the stationing of an estimated 50 US B61 nuclear bombs at Incirlik Air Base. At the same time, Turkey has pursued nuclear energy ambitions for several decades as part of its economic growth and energy security strategy. Turkey is a non-nuclear-weapon state party in good standing under the Treaty on the Non-Proliferation of Nuclear Weapons (NPT) and has publicly supported nonproliferation norms. Occasional remarks by Turkey’s leadership, however, have raised concerns about its long-term intentions. This research paper provides a comprehensive analysis of Turkey’s nuclear energy development. It surveys Turkey’s nuclear program and infrastructure, examines potential incentives and pathways for proliferation, identifies indicators of any deviation from peaceful commitments, and reviews verification mechanisms. The goal is to synthesize current information and offer a policy-relevant assessment of the proliferation risks associated with Turkey, in line with international nonproliferation frameworks.

State Profile and Nuclear Program

Background and Nuclear History

Turkey’s interest in nuclear technology dates to the 1950s, with plans for nuclear power formulated as early as 1970. During the Cold War, Turkey’s role as a NATO frontline state against the Soviet Union underscored its strategic importance, but nuclear weapons were supplied by the US under NATO sharing agreements rather than developed internally. Turkey established the Turkish Atomic Energy Authority (TAEK) in 1982 to supervise nuclear research and development (R&D). In the following decades, Turkey made several attempts to launch nuclear power projects, but these early bids were canceled or delayed due to financial, regulatory, and political hurdles. It was not until the 2010s that Turkey’s nuclear power ambitions gained traction, reflecting a high-level political push to reduce heavy dependence on imported energy and to support economic growth.

Nuclear Facilities and Fuel Cycle

Turkey does not yet operate any nuclear power reactors, but construction is underway. The country’s first nuclear power plant, at Akkuyu on the Mediterranean coast, is being built by Russian state-owned Rosatom under a build-own-operate (BOO) model. The Akkuyu Nuclear Power Plant will consist of four VVER-1200 pressurized water reactors (4,800 MWe total). Construction began in 2018, with Unit 1 expected online in 2025 and the remaining units coming online through 2028. A second plant was planned at Sinop on the Black Sea coast in partnership with a French-Japanese consortium, but a 2018 feasibility study deemed the project’s cost and schedule unfeasible under the original terms. Since then, Turkey has explored other potential partners for Sinop, including further talks with Russia in late 2022 about possibly constructing four reactors there. A third site at Igneada has also been under discussion, with Chinese firms offering to build reactors using US-derived technology.

Beyond power reactors, Turkey’s nuclear infrastructure includes research and training reactors. A small TRIGA Mark-II research reactor (250 kW) has operated at Istanbul Technical University (ITU) since 1979. Another research reactor, the 5 MW TR-2 at the Cekmece Nuclear Research and Training Center near Istanbul, was commissioned in 1981 and used for research and isotope production. The TR-2 originally ran on high-enriched uranium (HEU), but in 2009 it was shut down to undergo conversion to low-enriched uranium (LEU) as part of nonproliferation efforts. The reactor’s HEU fuel was returned to the US in 2009, and Turkish authorities have since implemented safety upgrades; regulatory approval to restart TR-2 with LEU has been sought, with plans to resume operations in support of research and isotope needs. These moves have eliminated weapons-grade HEU from Turkey, in line with the global effort to minimize civilian HEU. Aside from these reactors, Turkey does not currently operate facilities for sensitive nuclear fuel-cycle processes like uranium enrichment or reprocessing, and it has no known capability to produce nuclear fuel indigenously. All fuel for future power reactors will be supplied by foreign partners (e.g., Rosatom for Akkuyu) under long-term contracts. The Akkuyu agreement includes a provision to establish a fuel fabrication plant in Turkey, which would enable local assembly of nuclear fuel, though the plant would still rely on imported enriched uranium from Russia. Turkey’s domestic uranium resources, located in central Anatolia, are estimated at a few thousand tonnes of uranium, a modest supply. The Temrezli in-situ leach uranium mining project was explored by foreign firms, but the government revoked the licenses in 2018, stalling the project. In 2024, Turkey showed interest in securing uranium supply abroad, signing a cooperation pact with Niger to allow Turkish companies to explore Niger’s uranium mines.
Turkish officials, including the foreign and energy ministers, visited Niger in mid-2024 seeking access to its high-grade uranium deposits. These efforts reflect Turkey’s desire to ensure fuel supply for its “nascent nuclear-power industry” and potentially to gain experience in the front end of the fuel cycle, though any move toward indigenous enrichment remains a longer-term and closely scrutinized prospect (Sykes, P., Hoije, K., 2024).

Future Plans for Nuclear Energy

Looking forward, nuclear energy plays a central role in Turkey’s strategy to diversify its electricity mix and lessen dependence on imported natural gas and coal. The government’s current plans envision three nuclear power plant sites in operation by the mid-2030s (Akkuyu, Sinop, and a third site) with a total of up to 12 reactor units (approx. 15 GWe capacity). As of December 2024, Akkuyu’s four units are under active construction, with Rosatom financing the project and holding a majority stake. As for Sinop, Turkey had initially partnered with a Japanese-French consortium (Mitsubishi Heavy Industries, Itochu, and EDF/Areva) to build ATMEA-1 reactors, but cost estimates ballooned (to over $44 billion), leading to that consortium’s withdrawal in 2018. Turkey has since kept Sinop on the agenda, even courting Russia to take it over, but no final agreement has been reached. Meanwhile, China has emerged as a leading contender for the third Turkish plant, with negotiations in mid-2023 involving Chinese state companies proposing to build reactors (possibly Hualong One designs) at Igneada in Thrace. A project like this might involve US-derived technology through China General Nuclear’s partnership with Western firms. The timeline for the Sinop and Igneada projects remains uncertain, as both depend on financing terms, technology selection, and Turkish political will to commit further resources. Still, President Erdogan has repeatedly affirmed Turkey’s intent to become a nuclear energy country, even stating an ambition for “three nuclear power plants by 2030” in public remarks. To build the necessary human capital, Turkey has sent hundreds of students abroad for nuclear engineering education. Since 2011, Rosatom has sponsored Turkish students at Russian universities to staff Akkuyu; as of 2025, dozens of Turkish graduates have earned nuclear engineering degrees in Russia and returned to work at the plant.
Similar training initiatives exist with other partner countries, creating a pipeline of skilled personnel. While aimed at peaceful energy development, this growing base of nuclear expertise and infrastructure provides capabilities that could, under different political circumstances, be relevant to a weapons program. I return to this dual-use dimension later in the paper.

Nuclear Regulatory Framework

Turkey has recently overhauled its nuclear regulatory system to meet international standards as it moves toward nuclear power. Historically, the Turkish Atomic Energy Authority (TAEK) functioned as both a promoter and regulator of nuclear activities. In July 2018, Turkey created an independent Nuclear Regulatory Authority, or Nukleer Duzenleme Kurumu (NDK), transferring most of TAEK’s regulatory and licensing duties to this new body. The NDK regulates nuclear power plant safety, security, and all fuel cycle-related activities, issuing licenses and conducting inspections in line with IAEA guidelines. TAEK’s role was reduced to managing radioactive waste and decommissioning issues, and in 2020 TAEK was further consolidated into the Turkish Energy, Nuclear and Mineral Research Agency (TENMAK). TENMAK now acts as the national R&D organization for nuclear science, energy, and mineral resources, inheriting TAEK’s research institutes. The Atomic Energy Commission (AEC), chaired by a high-level official, oversees all nuclear activities and advises the government on policy. Other relevant bodies include the Ministry of Energy and Natural Resources, which sets energy policy, and the Energy Market Regulatory Authority (EMRA), which handles electricity market licensing and would approve electricity generation licenses for nuclear plants. Turkey has also updated its nuclear liability and safety laws in line with international conventions and is a signatory of the Paris Convention on Third Party Liability in the Field of Nuclear Energy. Regarding nuclear security, Turkey has welcomed international peer reviews. The IAEA conducted International Physical Protection Advisory Service (IPPAS) missions in 2003 and 2021, which reviewed Turkey’s nuclear security regime. The 2021 mission noted Turkey’s adherence to IAEA nuclear security guidance and its incorporation of the 2005 Amendment to the Convention on the Physical Protection of Nuclear Material (CPPNM), which Turkey ratified in 2015.
Overall, Turkey’s regulatory framework is being strengthened to support the safe expansion of nuclear energy, with clear separation of promotion (TENMAK) and regulation (NDK) functions as per international best practices. The framework provides the basis for ensuring that Turkey’s nuclear activities stay under effective control and exclusively peaceful.

Nonproliferation Treaty Obligations and International Commitments

Turkey has a long-standing commitment to global nonproliferation regimes. It became a party to the NPT as a non-nuclear-weapon state in 1979 and implemented a Comprehensive Safeguards Agreement with the IAEA in 1981. Under these safeguards, all nuclear material and facilities in Turkey are subject to IAEA monitoring to verify they are not used for weapons. Turkey was an early adopter of the IAEA Additional Protocol (AP), signing it in 2000 and bringing it into force in 2001. The AP grants the IAEA expanded rights of access and information, allowing for inspections of undeclared sites and verification of the absence of clandestine nuclear operations. Turkey’s implementation of the AP has allowed the IAEA to draw, since 2012, the broader conclusion that Turkey has no undeclared nuclear material or activities. This provides confidence in Turkey’s compliance with its nonproliferation obligations. In addition to the NPT, Turkey signed the Comprehensive Nuclear-Test-Ban Treaty (CTBT) in 1996, pledging not to conduct nuclear explosive tests. Turkey also participates in international initiatives aimed at preventing WMD proliferation. It has been a member of the Nuclear Suppliers Group (NSG) since 2000 and of the Zangger Committee since the 1990s. These memberships commit Turkey to implement strict controls on exports of nuclear and dual-use materials, making sure they are not diverted to weapons programs. Likewise, Turkey joined the Missile Technology Control Regime (MTCR) in 1997 to curb the spread of ballistic missiles capable of delivering WMD. As a chemical weapons possessor in the past, Turkey signed and ratified the Chemical Weapons Convention (CWC) and completed the destruction of its limited chemical stockpile. It also adheres to the Biological Weapons Convention (BWC), and no biological weapons program is known to exist in the country.
Perhaps most importantly, Turkey, like all UN member states, is bound by UN Security Council Resolution 1540, which requires national laws to prevent non-state actors from acquiring nuclear, biological, and chemical (NBC) weapons. Turkey has welcomed Resolution 1540 and submitted multiple national reports on its implementation, detailing measures such as export controls, border security, and criminalization of proliferation activities. Although Turkey is not a member of any formal nuclear-weapon-free zone, it has voiced support in international forums for the establishment of a WMD-free zone in the Middle East. Turkey’s stance has been that all countries in its region (including Israel and Iran) should forgo WMD, aligning with its broader advocacy for disarmament and a fair nonproliferation regime.

To summarize, Turkey’s official posture is firmly embedded in the global nonproliferation regime: it has comprehensive IAEA safeguards and an Additional Protocol in force, and it participates in all major export control and nonproliferation initiatives. These obligations form a strong legal barrier to diversion of its growing nuclear energy program for non-peaceful uses. However, as the next section examines, the regional security context and Turkey’s evolving strategic calculus could, under some conditions, create incentives to reconsider these commitments.

Proliferation Pathways

Strategic Incentives for Nuclear Weapons

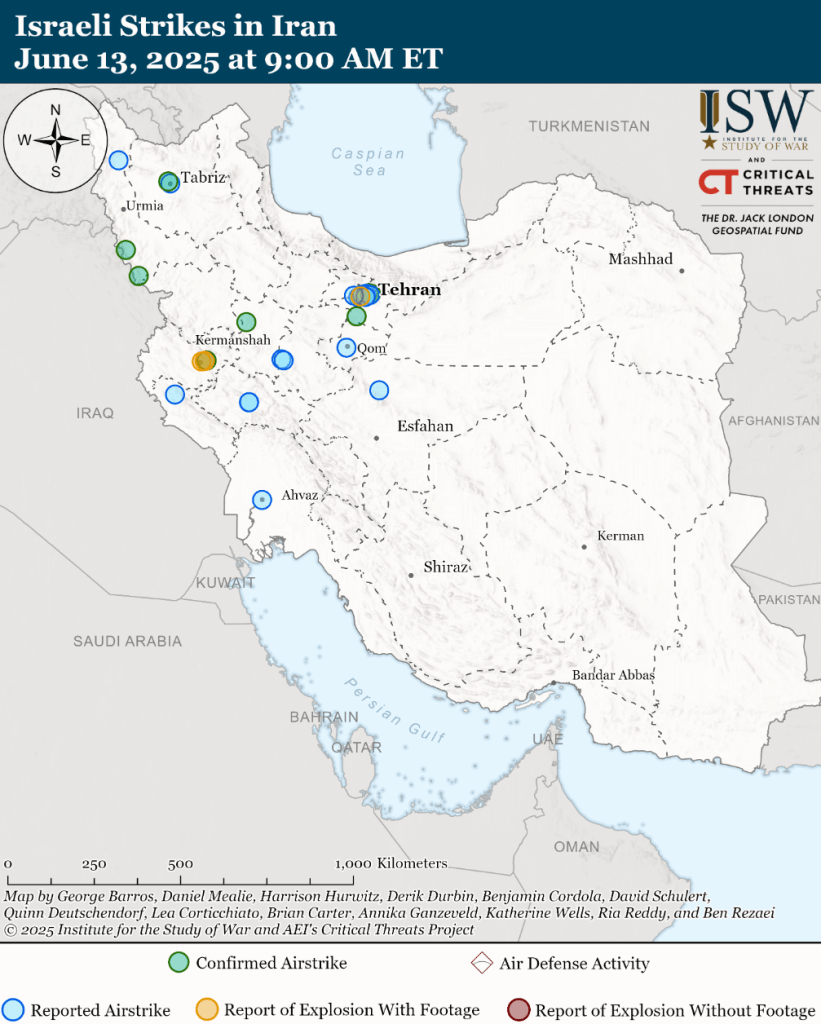

Under existing conditions, Turkey does not actively seek nuclear weapons. That said, analysts have identified several scenarios in which Turkey’s incentives could shift toward proliferation. The most cited trigger is a nuclear-armed Iran. Turkey and Iran are regional rivals balancing each other’s influence; if Iran were to openly acquire nuclear weapons or become a threshold nuclear state, Turkey could feel a heightened security threat and pressure to respond in kind. The prospect of a nuclear Iran has already spurred debates in Turkey’s strategic community about Turkey’s vulnerability and the reliability of external protection. While NATO’s nuclear umbrella currently covers Turkey, President Erdogan has voiced doubts about its long-term credibility, questioning whether it is acceptable that others are free to have nuclear-tipped missiles while Turkey cannot. This sentiment suggests a perceived inequity in the nonproliferation order and a desire for greater strategic autonomy. If Turkey’s confidence in NATO security guarantees diminishes, its leaders might reassess the costs and benefits of an independent deterrent. Calls to remove US nuclear weapons from Incirlik have increased in recent years. If those weapons were removed without an adequate alternative security arrangement, Turkey could perceive a deterrence gap.

Regional dynamics beyond Iran also play into Turkey’s strategic calculus. Turkey borders Syria and is in proximity to Israel; the former is a past proliferation concern and the latter an undeclared nuclear state. Erdogan has rhetorically pointed to Israel’s nuclear arsenal as an unfair threat in the region, although Israel’s weapons have existed for decades and are likely not the primary driver for Turkey today. More relevant are Turkey’s great-power neighbors: Russia’s aggressive posturing in Ukraine and Syria and its nuclear saber-rattling unsettle the security environment. Although Russia is a partner in Turkey’s energy projects, their geopolitical interests diverge in places like Syria, the Caucasus, and the Black Sea. A nuclear capability could be seen by some Turkish strategists as an equalizer to deter a nuclear-armed Russia or to assert Turkey’s leadership in a multipolar Middle East. Additionally, domestic and prestige factors could serve as incentives. Under Erdogan’s administration, Turkey has embraced a narrative of a “New Turkey” and neo-Ottoman strategic independence. Possessing advanced technology or even nuclear weapons can be viewed as a status symbol of great-power standing. Some proliferation theories suggest countries may pursue nuclear weapons partly to bolster national pride or international standing. Erdogan’s 2019 statement, “there is no developed nation in the world that doesn’t have them”, reflects a misconception but possibly also a prestige-driven impulse: he equated nuclear armament with being a developed, powerful nation, implying Turkey should not be left behind. Domestically, pursuing nuclear weapons might rally nationalist support by asserting Turkey’s sovereignty against Western double standards, although it would conflict with Turkey’s international commitments and likely invite sanctions or isolation that most Turkish citizens would deem unacceptable.

In weighing these incentives, it is important to note that Turkey’s powerful military and bureaucratic establishment has historically prioritized alignment with NATO and adherence to the NPT. For decades, the Turkish General Staff and diplomats were staunch defenders of nonproliferation, partly to maintain NATO cohesion and EU accession prospects. Turkey’s civil-military balance, however, has shifted under Erdogan, with an assertive, nationalist civilian leadership consolidating control. If the political leadership decided a nuclear deterrent was necessary for national survival or prestige, domestic opposition from the traditional secular elite or military might not be as decisive a constraint as in the past. Still, any such decision would be fraught with risk, potentially jeopardizing Turkey’s security ties and economy. Most analysts assess that Turkey is unlikely to go nuclear unless the strategic environment changes drastically, for example if Iran openly crosses the nuclear threshold or the NATO security guarantee erodes beyond repair. Even in those cases, Turkey may first pursue intermediate options, such as developing a latent capability or a civilian fuel cycle that hedges toward weapons, before outright weaponization.

Potential Proliferation Pathways

If Turkey were to seek nuclear weapons, how could it technically proceed given its current capabilities and constraints? One pathway could be the uranium enrichment route. Turkey has significant experience with nuclear materials at the reactor level but currently lacks enrichment facilities. However, Turkey has consistently asserted its “right to enrich” under the NPT for peaceful purposes. In a proliferation scenario, Turkey may invoke an energy security rationale to establish an indigenous uranium enrichment program, ostensibly to fuel future power reactors. This could begin overtly as a small pilot enrichment facility under safeguards. One possible indicator of such intent is Turkey’s pursuit of raw uranium sources like Niger, since acquiring uranium ore makes sense mainly for a state that plans to fabricate or enrich fuel domestically rather than rely on foreign supply. A suspiciously timed deal for large quantities of uranium or the import of enrichment-related technology would set off alarms. Were Turkey to secretly acquire or build centrifuges, it might leverage foreign expertise. There is historical precedent for illicit procurement networks using Turkey as a transit point: components for Pakistan’s AQ Khan network, for example, passed through Turkish companies in the early 2000s. Turkey could potentially seek external assistance for a weapons effort from allies like Pakistan, which has an established nuclear arsenal. Speculation exists that Pakistan and Turkey, sharing strong defense ties, might cooperate if Turkey decided to proliferate. There is currently no public evidence of any Pakistani commitment to aid a Turkish nuclear weapons program, and Pakistan would face intense international backlash if it openly transferred such technology. More likely, Turkey may try to develop the pieces of a fuel cycle indigenously. This could include, for example, a covert centrifuge R&D project hidden within its civil nuclear research institutes.
Turkey’s well-educated nuclear engineers could form the backbone of a secret program, though designing efficient centrifuges or obtaining high-strength materials in secret would be a significant challenge under trade surveillance.

Another pathway is the plutonium route, but this appears less practical for Turkey. Turkey’s power reactors at Akkuyu are light-water reactors under IAEA safeguards. Any diversion of spent fuel for plutonium reprocessing would likely be detected, and Turkey lacks a reprocessing plant. The acquisition or construction of a clandestine reprocessing facility would be difficult to conceal. Turkey also has no heavy water reactors, which produce bomb-suitable plutonium more efficiently; if it suddenly announced plans for a research reactor of the type that could yield significant plutonium, that would raise red flags. A theoretical scenario could involve Turkey repurposing its research reactor activities, for example by producing small quantities of plutonium in the TR-2 reactor. However, the reactor is small and its fuel is now LEU, which is not ideal for weapons-grade plutonium production. This is an unlikely route given safeguards scrutiny and low output. A more dramatic approach would be for Turkey to obtain a complete weapon or some fissile material from outside the country. While not likely, I cannot entirely dismiss scenarios like stealing or seizing the US B61 bombs at Incirlik in a crisis. Those bombs, however, are under US control with Permissive Action Links and would be rendered unusable if seized; such actions would also damage US-Turkey relations and bring about global censure. Alternatively, Turkey could try to buy a weapon or fissile material on the black market. This, too, is remote given today’s monitoring and the lack of any known willing seller aside from North Korea, which Turkey would be extremely unlikely to engage.

A more subtle proliferation strategy for Turkey might be a nuclear hedge: developing nuclear latency without overt weaponization. This could involve building up all components short of the bomb, such as a domestic enrichment capability, a stockpile of LEU, advances in missile delivery systems, and even naval nuclear propulsion research, which could serve as a loophole for withdrawing material from safeguards because naval reactors can use highly enriched fuel. Turkey has already been building up its ballistic missile program, including production of the Bora-1 short-range ballistic missile (SRBM) with a range of 280 km, testing of the Tayfun missile (over 500 km) in 2022, and plans to extend its range to 1,000 km. While officially for conventional deterrence, such longer-range missiles could be adapted to deliver nuclear warheads in the future. Turkey’s pathway to a bomb, if it ever chose to pursue one, would likely begin with leveraging its civil nuclear program to acquire enrichment technology under seemingly legal pretenses or, less likely, turning to covert external procurement. Each path faces significant technical and political obstacles and would probably be detected before yielding a usable weapon. I will expand on this further in the next section.

Indicators and Verification Mechanisms

With Turkey’s extensive treaty commitments, any move toward nuclear weapons development would generate observable indicators detectable by international monitors or intelligence. Potential indicators of deviation from peaceful use include both changes in policy behavior and technical anomalies:

- Policy and legal indicators: An obvious indicator would be if Turkey’s government openly signaled intent to leave or undermine its nonproliferation obligations. For example, withdrawing from the NPT or the IAEA Safeguards Agreement would be an unmistakable warning and an escalatory step. Short of withdrawal, Turkey could cease implementation of the AP or refuse IAEA inspections that it previously accepted, on grounds of sovereignty or reciprocity. Such behavior would strongly suggest clandestine activity. Heightened nationalist rhetoric, like repeated presidential statements about the right to nuclear weapons or hints that Turkey might need its own deterrent if regional threats grow, would reinforce concerns. While Erdogan’s past remarks were one instance, a continuing pattern of such statements or inclusion of nuclear options in doctrinal discussions would indicate a policy shift.

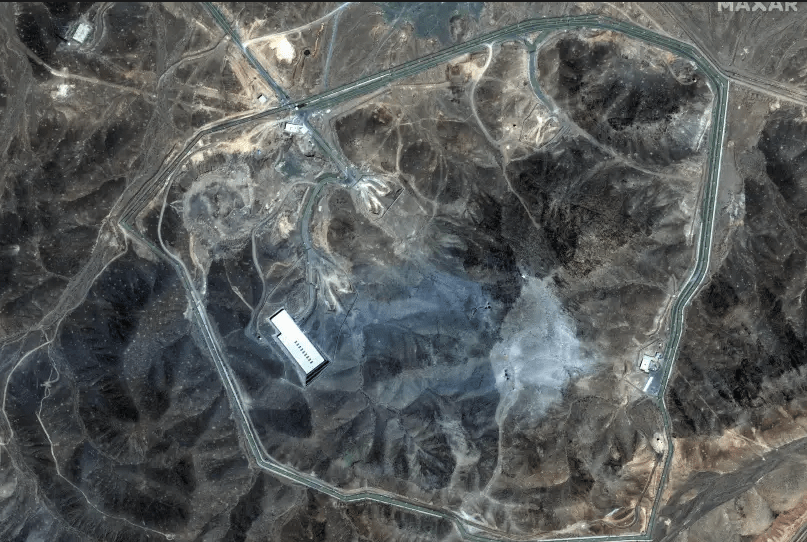

- Undeclared facilities and/or activities: On the more technical side, the emergence of any undeclared nuclear facility would be a red flag. Under the AP, Turkey must declare any new nuclear-related site. Discovery (through satellite imagery or other intelligence gathering methods) of a suspicious installation could indicate a secret enrichment plant. Additionally, construction of unusual scientific facilities like a heavy water production plant or a large radiochemistry lab that could handle plutonium with no clear civilian justification would raise alarms. Turkey’s extensive territory and tunneling expertise mean a covert site is not impossible, but it would be challenging to operate such a facility without detection in the long term, given overhead surveillance and the need to procure specialized equipment internationally. Analysts would scrutinize high-resolution satellite images for telltale signs such as security perimeters, ventilation stacks, waste streams at research sites, etc.

- Procurement anomalies: A more subtle sign of proliferation intent could be illicit procurement. If Turkish entities start seeking unusual dual-use materials or technology inconsistent with their known civilian programs, this would be a key indicator. Examples could include attempts to purchase high-strength maraging steel, frequency converters, vacuum pumps, or ring magnets suitable for gas centrifuges outside of normal channels. Turkey’s membership in the NSG means it has pledged to enforce export controls, but procuring such items for its own use could involve covert import channels.

- Scientific and technical publications: Clues often emerge from the scientific community. If Turkish nuclear scientists begin publishing research on enrichment techniques, laser isotope separation, high-temperature plutonium chemistry, or warhead design physics, it might indicate state encouragement of expertise in weapons-relevant areas. Open-source analysts monitor publications and patent filings for such patterns. A historical parallel is how Iranian scientists’ papers on neutron initiators and uranium metallurgy were early giveaways of weapons-relevant R&D. For Turkey, any sudden surge in advanced nuclear fuel cycle research beyond what is needed for power reactor operation would be notable. The Turkish government’s tight control over research institutions might limit open publishing, but international collaborations or conference presentations could inadvertently reveal new focus areas.

- Other behavioral signs: Turkey might seek to harden or diversify its delivery systems as a precursor. Longer-range missile tests or indigenous satellite launch vehicles would be dual-use developments relevant to nuclear delivery. Turkey’s pursuit of air and missile defense could also be seen as an effort to protect against Israeli or Iranian missiles in a world where nuclear deterrence is a factor. While not a concrete indicator of proliferation, a heavy emphasis on ballistic missile capability combined with nuclear rhetoric would deepen suspicion.

To detect and respond to these indicators, the international community relies on a suite of verification systems and monitoring approaches. These include:

- IAEA safeguards and the AP: If Turkey remains under its current agreements, the IAEA is the first line of defense. The IAEA conducts regular inspections at declared facilities to verify that no nuclear material is diverted. Inventory checks and surveillance ensure that all enriched uranium and spent fuel are accounted for. Under the AP, the IAEA can request complementary access to any site, even non-nuclear sites, to investigate indications of nuclear-related activities. As an example, inspectors can visit a university lab or industrial facility on short notice if they suspect nuclear material might be present. They may also carry out environmental sampling, swiping surfaces and sampling air for traces of nuclear isotopes that might indicate clandestine work. Turkey’s broad cooperation has so far meant the IAEA has not reported any irregularities. If evidence arose, like foreign intelligence tips about a hidden lab, the IAEA could invoke a special inspection to clarify the situation, though this requires Board of Governors approval if the state resists.

- National and allied intelligence: NATO allies, particularly the US, maintain intelligence efforts regarding Turkey’s strategic programs. Signals intelligence (SIGINT) and human intelligence (HUMINT) could pick up conversations or orders related to secret nuclear activities. For example, communication with foreign suppliers about sensitive equipment or unusual military orders to prepare tunnels could be intercepted. Throughout the Iranian nuclear crisis, Western intelligence often uncovered facilities before the IAEA was informed. A similar watch on Turkey would likely reveal early moves toward weaponization. Turkey’s integration in Western defense networks might make covert activities harder to hide from its allies. If such intelligence were obtained, allies would most likely approach Turkey privately at first and, if concerns persisted, raise the issue at the IAEA Board of Governors or the UN Security Council.

- Open-source and non-governmental monitoring: In today’s information age, independent researchers and NGOs play an important role. High-resolution commercial satellite imagery is readily available; think tanks like the Institute for Science and International Security or Turkey’s own EDAM could analyze new construction. If a large building appears at the Cekmece Nuclear Research and Training Center in Kucukcekmece, for example, with no declared purpose, analysts will likely flag it. Turkey’s media and academia may also leak information if scientists are reassigned to secret projects or if there is an unexplained budget surge for a strategic program. Despite political pressures, Turkey maintains a varied press environment where some investigative journalists continue to pursue sensitive military stories, unless national security laws silence them.

- International legal systems: If clear evidence of a proliferation attempt emerged, the issue would likely escalate to the UN Security Council (UNSC) to authorize stronger verification or enforcement. The IAEA could refer Turkey to the UNSC for non-compliance, as it did with Iran in 2006, if Turkey were found breaching safeguards. The UNSC could then mandate more aggressive inspections or demand that Turkey halt certain activities. In extreme cases, sanctions could be imposed to dissuade further progress. One tool could be a bespoke monitoring mechanism modeled on the Joint Comprehensive Plan of Action (JCPOA) used for Iran, involving extensive verification beyond the AP, such as continuous monitoring of centrifuge production. Reaching that stage, however, would indicate a severe breakdown of trust. Before matters escalated that far, Turkey’s partners would likely exercise diplomatic pressure and offer incentives to keep Turkey within the nonproliferation fold.

There have been no signs to date that Turkey has undertaken any covert nuclear weapons-related work. The IAEA has continued to draw the broader conclusion that all nuclear material in Turkey remains in peaceful use. Turkish transparency reinforces confidence in its compliance. That said, maintaining vigilance is prudent. The verification systems described make it very likely that, if Turkey ever did pivot toward proliferation, it would face early detection and international intervention long before actual weaponization. This alone serves as a strong deterrent against any covert program.

Conclusion

Turkey’s nuclear trajectory epitomizes the dual-use dilemma at the core of the nonproliferation regime: a country pursuing a legitimate nuclear energy program while navigating a volatile security environment and harboring great power aspirations. My analysis finds that Turkey’s proliferation risk, at present, remains low. The country is deeply embedded in treaties like the NPT and relies on NATO security guarantees, giving it strong incentives to abstain from nuclear weapons. Turkey’s nuclear energy program is under strict international oversight, and recent steps show its commitment to purely peaceful use. Turkey’s unique regional posture, however, means its strategic calculus may change if the regional balance erodes. President Erdogan’s criticism of the unfairness of the current order suggests that Turkish restraint should not be taken for granted if proliferation cascades begin in the region.

From a policy perspective, a few measures can help keep Turkey’s proliferation risk in check. First, sustaining NATO’s assurances to Turkey is important, since clear commitments and missile defense cooperation can mitigate the security fears that might otherwise spur a nuclear option. The continued presence of NATO nuclear sharing serves as a material reminder that Turkey is protected, and allies should quietly engage Ankara on the role these weapons play and the conditions under which their removal would be considered. Second, the international community should support Turkey’s civil nuclear program in a way that minimizes proliferation-prone capabilities. This can include offering fuel supply guarantees, so Turkey feels no need to enrich uranium, and assisting with spent fuel management. Negotiating a fuel take-back agreement for Akkuyu’s spent fuel, for example, would remove stockpiles of plutonium-bearing material from Turkey. Additionally, encouraging Turkey to source fuel through multilateral frameworks or international fuel banks would reinforce the norm against national enrichment.

Third, robust diplomacy with Turkey regarding regional threats can address the root motivators. If Iran’s nuclear impasse worsens, involving Turkey in solutions will be important so that Turkey feels its security concerns are heard and managed collectively rather than having to fend for itself. Turkey has played a role in past diplomatic efforts, such as the 2010 Tehran fuel swap initiative with Brazil. Reintegrating Turkey as a constructive partner in nonproliferation initiatives, rather than treating it as a potential adversary, is the smarter play. Turkey could also be encouraged to continue demonstrating leadership in nonproliferation by championing the entry into force of the CTBT, which it has already ratified, and by actively participating in proposals for a Middle East WMD-Free Zone. These steps would bolster Turkey’s international image as a responsible stakeholder, countering any domestic narrative that might favor a weapons path.

Finally, the international community needs to maintain vigilant monitoring of Turkish nuclear activities, but in a way that does not alienate or unjustly accuse Turkey. The existing verification tools are adequate, but should Turkey’s behavior change, preemptive diplomacy will be needed to address issues before mistrust spirals out of control. Open lines of communication between Turkish authorities and the IAEA will help clarify any technical questions, for example by declaring any new nuclear research projects under the AP to avoid misconceptions.

To conclude, Turkey today presents a low proliferation risk and in many ways is a model of a non-nuclear-weapon state investing in nuclear power under proper safeguards. Its domestic regulatory reforms and international cooperation on nuclear security are positive indicators. The risk profile is not static, however; it depends on geopolitical developments. The evolution of Iran’s nuclear program, the status of Turkey’s relations with the West, and internal political shifts will all affect Turkey’s strategic choices. Proliferation in Turkey is not inevitable, nor is it likely in the near term, but it is conditional. By understanding those conditions and reinforcing the barriers, the international community can help ensure that Turkey continues to find that the benefits of nonproliferation outweigh any perceived gains of developing a nuclear weapon. Keeping Turkey within the nonproliferation regime strengthens regional stability and upholds the integrity of a global norm that, as President Erdogan himself argued at the UN, should apply equally to all. In the end, Turkey’s case demonstrates the importance of addressing the security and prestige concerns that drive proliferation, thereby preserving its role as a responsible actor in the pursuit of nuclear technology for peaceful purposes.

